AI systems for detecting cyber attacks

Key insights and lessons learned from the research

In this article, we will explore, using a concrete example, the main techniques that AI systems can use to detect cyber attacks, highlighting their pros and cons when faced with an intelligent adversary.

In the previous article, we discussed the concept of intelligence, presented standardized definitions of artificial intelligence (AI) systems, and highlighted that in certain areas, such as cybersecurity, it is necessary to design AI systems to take into account the potential moves of an intelligent adversary. Now, let's see how this is possible.

How can we build AI systems capable of operating in adversarial environments?

The key is to design systems so that their functionality and performance are resilient to data manipulation by an intelligent adversary. This is crucial when using machine learning, as this mechanism is intrinsically designed to adapt a model based on data. This may seem like a hopeless problem, but there are practical and effective engineering solutions.

Let's consider the case of AI systems applied to intrusion detection (intrusion detection systems, IDS). A first key point is to ensure that the system relies on truly discriminating information, that is, information that distinguishes an attack from a legitimate instance. By discriminating, I mean information that an adversary cannot modify without weakening the attack or making it impossible altogether: these are characteristics that must be present in the data for an attack to be effective. Modifying this data therefore has a cost for the adversary.

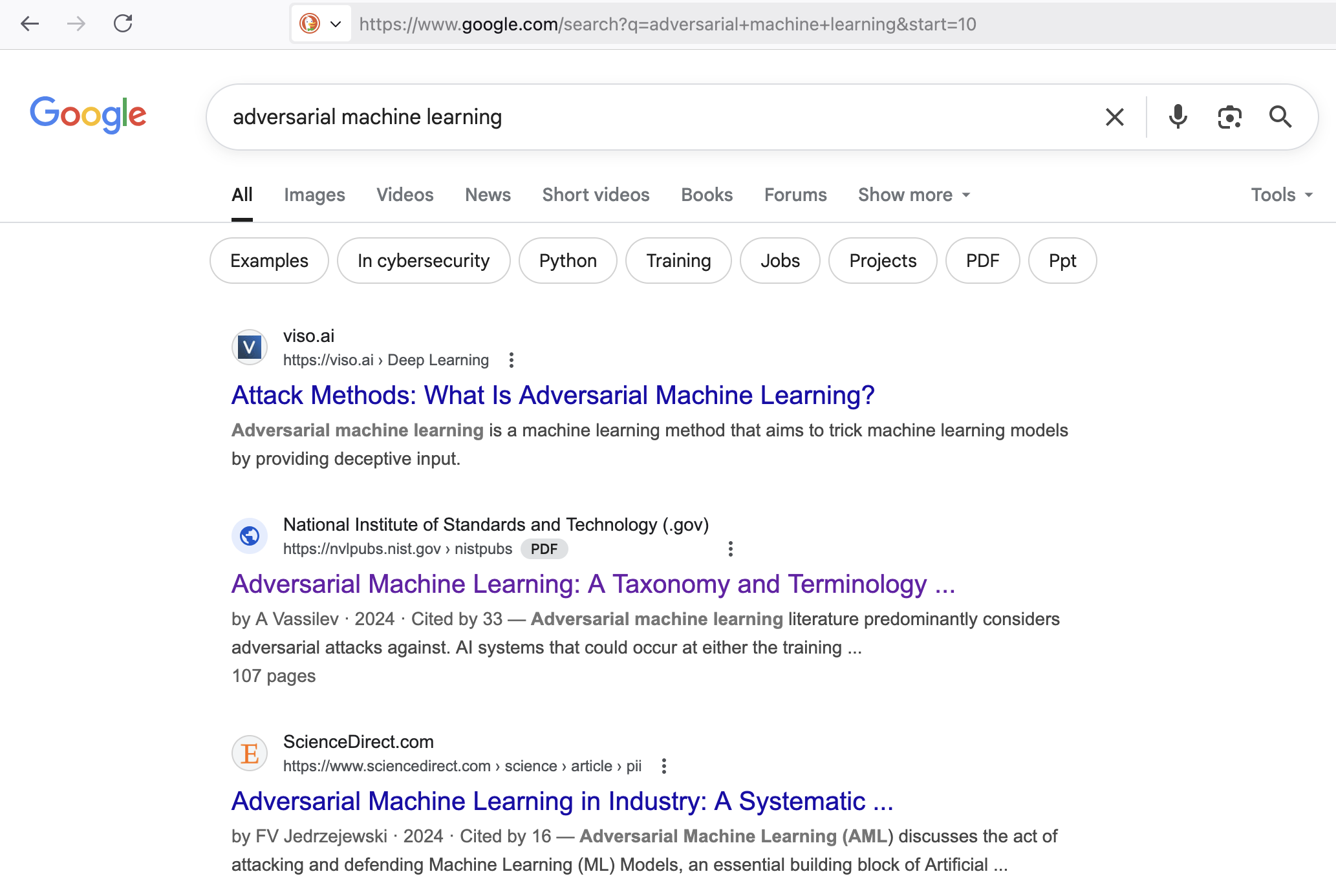

Let's take a very simple example. Consider the Google web application that provides search results (https://www.google.com/search). It receives the search query via the q parameter and the offset of the first result to display via the start parameter (results are shown 10 per page). For example, to obtain the results for the search "adversarial machine learning" while skipping the first ten (start=10), the URL is as follows (spaces, which are not allowed in URLs, are encoded with a + sign):

https://www.google.com/search?q=adversarial+machine+learning&start=10
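Before any detection can take place, a detector has to extract the value of each parameter from the request. A minimal sketch using Python's standard `urllib.parse` module (the variable names are illustrative):

```python
from urllib.parse import urlsplit, parse_qs

url = "https://www.google.com/search?q=adversarial+machine+learning&start=10"

# parse_qs decodes '+' back into spaces and maps each parameter
# to the list of values it received.
params = parse_qs(urlsplit(url).query)
print(params["q"])      # ['adversarial machine learning']
print(params["start"])  # ['10']
```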

Try changing the start value in your browser's address bar, for example to 20, 30, and so on: you will see that the Google results page changes accordingly.

In general, anyone can insert an arbitrary value for any application input. For example, an attacker could insert values (payloads) to probe for a possible SQL injection vulnerability (Injection, the category that includes SQL injection, ranks third in the 2021 edition of the OWASP Top 10), like this (start=AND 1):

https://www.google.com/search?q=adversarial+machine+learning&start=AND+1

Now suppose you want to detect this type of attack.

For example, you could collect a list of the most common SQL injection payloads and compare each of them with the value received for the attribute. This brute-force approach would work, but only for attacks strictly within the list. Attackers could quite easily find a payload missing from your list and carry out the attack undetected, even automatically, with a popular tool such as sqlmap. Furthermore, the computational cost of detection would grow with every new payload, since each request would have to be compared with every element of the list.
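A minimal sketch of this brute-force matching in Python. The four-entry signature list is hypothetical and far smaller than any real payload list; it only serves to show both the linear cost and how easily a variant slips through:

```python
# Hypothetical, deliberately tiny list of known SQL injection payloads.
SIGNATURES = ["' or 1=1", "and 1", "union select", "; drop table"]


def matches_signature(value: str) -> bool:
    """Return True if the value contains any known payload (case-insensitive).

    Every request is compared with every signature, so the cost grows
    linearly with the length of the list.
    """
    normalized = value.lower()
    return any(signature in normalized for signature in SIGNATURES)


print(matches_signature("AND 1"))    # True: the payload is in the list
print(matches_signature("AND 2>1"))  # False: a trivial variant evades it
print(matches_signature("10"))       # False: legitimate value
```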

A more effective approach might be to train a machine learning model on a list of typical SQL injection payloads, as done here. In this case, the model would also be able to detect new payloads, never seen during training, that share similar characteristics. Let's say it is able to detect all automated SQL injection attempts by tools like sqlmap. The cost to an adversary would be greater than with brute-force detection. Detection capabilities would, however, still be limited to this type of attack, while countless other types of injection exist, such as XML, XPath, command, and CRLF injection, as well as cross-site scripting.
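As an illustration only, here is a minimal sketch of such a model: a multinomial naive Bayes classifier over character trigrams, written with the standard library. This is one simple choice of technique, not necessarily the one used in the work cited above, and the twelve training strings are hypothetical toy examples:

```python
import math
from collections import Counter


def ngrams(text: str, n: int = 3) -> list[str]:
    """Character n-grams of the lowercased text, padded with spaces."""
    padded = f" {text.lower()} "
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]


class NaiveBayes:
    """Multinomial naive Bayes over character trigrams, with Laplace smoothing."""

    def fit(self, samples: list[str], labels: list[str]) -> "NaiveBayes":
        self.priors = Counter(labels)
        self.counts = {label: Counter() for label in self.priors}
        for text, label in zip(samples, labels):
            self.counts[label].update(ngrams(text))
        self.vocab = set().union(*self.counts.values())
        self.totals = {label: sum(c.values()) for label, c in self.counts.items()}
        return self

    def log_score(self, text: str, label: str) -> float:
        """Log prior plus smoothed log likelihood of every trigram."""
        score = math.log(self.priors[label] / sum(self.priors.values()))
        denominator = self.totals[label] + len(self.vocab)
        for gram in ngrams(text):
            score += math.log((self.counts[label][gram] + 1) / denominator)
        return score

    def predict(self, text: str) -> str:
        return max(self.counts, key=lambda label: self.log_score(text, label))


# Toy, hand-written training set (hypothetical, for illustration only).
sqli = ["' or 1=1 --", "1 union select null, null --", "' and 1=1 --",
        "1; drop table users --", "admin' --", "1' or '1'='1"]
benign = ["adversarial machine learning", "weather tomorrow", "python tutorial",
          "cheap flights to rome", "10", "20"]
model = NaiveBayes().fit(sqli + benign, ["sqli"] * 6 + ["benign"] * 6)

print(model.predict("' union select username from users --"))  # sqli (unseen payload)
print(model.predict("machine learning course"))                 # benign
print(model.predict("-10"))                                     # benign
```

The unseen payload is flagged because it shares many trigrams with the training payloads. The last line anticipates a limitation discussed below: a value that resembles no known attack is simply classified as benign.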

At this point, you're determined: for each known attack category, you deploy a machine learning/AI-based classifier. The cost for attackers should be greater than before: to evade detection, they would have to devise an attack not covered by any of the classifiers. Your system might work well, but the computational cost would grow significantly with the number of classifiers that, simultaneously or in sequence, have to analyze the attribute values. Furthermore, the probability of false alarms would grow with the number of classifiers, since each of them could independently make an incorrect prediction. In any case, you would only be able to detect the known attacks you've considered, and you would remain exposed to new types of attacks (for example, those that exploit zero-day vulnerabilities).
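The growth of false alarms can be quantified under a simplifying assumption: if each of k classifiers has the same false-positive rate p and they err independently, a legitimate request triggers at least one alarm with probability 1 - (1 - p)^k. The 1% rate below is a hypothetical figure chosen for illustration:

```python
def combined_false_alarm_rate(fpr: float, k: int) -> float:
    """Probability that at least one of k classifiers raises a false alarm.

    Assumes each classifier has false-positive rate fpr and that their
    errors are independent (an idealizing assumption).
    """
    return 1 - (1 - fpr) ** k


for k in (1, 5, 10):
    print(k, round(combined_false_alarm_rate(0.01, k), 4))
# 1 0.01
# 5 0.049
# 10 0.0956
```

With ten classifiers, almost one legitimate request in ten would raise an alarm.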

Even a simple manipulation like this one, with a negative value (start=-10):

https://www.google.com/search?q=adversarial+machine+learning&start=-10

would go unnoticed, since it contains none of the patterns the classifiers were trained to recognize.

What to do then?

So far, we've given examples of a signature-based technique that detects known attacks, known as misuse-based detection. It is also widely used in antivirus/antimalware software, and it is the main reason why such software must be constantly updated. Defenders are at an inherent disadvantage here, as they are defenseless against new types of attacks and against variants sophisticated enough to evade detection. Furthermore, the attack samples used for training are generated by the attackers themselves, who can potentially manipulate them at will to frustrate or compromise the learning of an AI system. An example from a few years ago can be found here.

However, there is a complementary technique that is more powerful and robust against an adversary (if properly designed): anomaly-based detection.

The anomaly-based detection technique

In the case in question, we could collect all the values received for the start attribute and compute statistics on the observed data type. We would notice that in most cases start receives an integer, and we could assume that this data type is normal, i.e., legitimate. We could even establish the minimum and maximum acceptable values for the integer. Any deviation from this pattern would raise an alarm. It is possible to build an AI system that fully automates this process. At this point, the attacker's chances of performing any injection attack without being identified are practically nil, because an injection always requires inserting some unexpected character: this is what I meant by discriminating information. The cost of manipulation for the attackers is maximal: if they send an integer within the range of expected values (automatically learned by the algorithm), they simply cannot carry out an attack that exploits incorrect input validation.
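A minimal sketch of this learning step, assuming the profile is just "an integer between the observed minimum and maximum". The `IntegerProfile` class, the 95% threshold, and the training values are hypothetical choices made for illustration:

```python
import re
from dataclasses import dataclass

# Strict integer syntax: optional minus sign, ASCII digits only.
INTEGER = re.compile(r"-?[0-9]+")


@dataclass
class IntegerProfile:
    """Learned model of a parameter that normally carries an integer."""
    minimum: int
    maximum: int

    def is_anomalous(self, value: str) -> bool:
        if not INTEGER.fullmatch(value):
            return True  # not an integer at all, e.g. "AND 1"
        return not self.minimum <= int(value) <= self.maximum


def learn_integer_profile(samples: list[str], min_share: float = 0.95) -> IntegerProfile:
    """Learn the integer range, provided integers dominate the observed values."""
    integers = [int(s) for s in samples if INTEGER.fullmatch(s)]
    if len(integers) < min_share * len(samples):
        raise ValueError("parameter is not predominantly an integer")
    return IntegerProfile(min(integers), max(integers))


# Hypothetical observed values for the start attribute.
training = ["0", "10", "20", "10", "30", "0", "40", "20"]
profile = learn_integer_profile(training)
for value in ["10", "30", "AND 1", "-10", "10000"]:
    print(repr(value), profile.is_anomalous(value))
# '10' False, '30' False, 'AND 1' True, '-10' True, '10000' True
```

Note that the injection attempt and the negative value are both flagged without the model ever having seen an attack.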

Using this technique, we focus on what is normal and can detect any attack, known or unknown, as an anomaly, that is, a deviation from normality. The basic assumption, which typically holds, is that the normal, typical traffic profile is legitimate. The result is a very simple yet extremely effective model.

In our example, we expect most of the traffic to come from legitimate users, who use the Google service as intended, through requests generated by the service's own user interface.

What if this is not the case?

This is certainly a possibility. To make learning robust, you can, for example:

- select the traffic used for learning (for example, considering only that of users registered on the system);

- provide limits on the influence that each traffic source (e.g. each registered user) can have on the learned model;

- establish whether a dominant (normal) profile can actually be identified in the traffic;

- use predefined models that incorporate a priori knowledge of the problem (from an expert) and robust statistical inference techniques.
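The first two measures can be sketched in a few lines: keep only traffic from registered users and cap how many values each user contributes. The user IDs, the registration set, and the cap of 5 are all hypothetical:

```python
from collections import defaultdict


def select_training_values(requests, registered, max_per_source=5):
    """Keep only values sent by registered users, at most max_per_source each.

    requests: iterable of (user_id, value) pairs.
    """
    per_source = defaultdict(int)
    kept = []
    for user, value in requests:
        if user not in registered or per_source[user] >= max_per_source:
            continue  # unregistered source, or source has used up its quota
        per_source[user] += 1
        kept.append(value)
    return kept


# Hypothetical traffic: 100 registered users, one registered attacker
# ("mallory") flooding the system, and one anonymous request.
legitimate = [(f"user{i}", str(10 * (i % 5))) for i in range(100)]
attack = [("mallory", "-99999")] * 1000
anonymous = [("anon", "AND 1")]
registered = {f"user{i}" for i in range(100)} | {"mallory"}

values = select_training_values(legitimate + attack + anonymous, registered)
share = values.count("-99999") / len(values)
print(len(values), round(share, 3))  # 105 0.048
```

Although the attacker sent ten times more requests than all legitimate users combined, their values make up less than 5% of the training set.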

This way, an attacker has very limited influence on training, and detection can be both robust and efficient.

Be careful: design choices that do not consider an adversarial environment can lead to incredibly vulnerable algorithms, where the attacker can create huge variations in the model with very little traffic.
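The integer-range model is a good example of such a pitfall. If the bounds are simply the observed minimum and maximum, a single poisoned request is enough to widen them arbitrarily. A minimal sketch comparing this with one possible robust alternative, empirical quantiles; the 1%/99% levels and the data are hypothetical:

```python
def naive_bounds(values: list[int]) -> tuple[int, int]:
    """Min/max bounds: a single extreme sample moves them arbitrarily far."""
    return min(values), max(values)


def quantile_bounds(values: list[int], lower: float = 0.01,
                    upper: float = 0.99) -> tuple[int, int]:
    """Bounds from empirical quantiles: a few outliers barely move them."""
    ordered = sorted(values)

    def pick(q: float) -> int:
        return ordered[min(len(ordered) - 1, int(q * len(ordered)))]

    return pick(lower), pick(upper)


# Hypothetical training data: 1,000 legitimate offsets (0, 10, ..., 90)
# plus a single poisoned value injected by an attacker.
training = [10 * (i % 10) for i in range(1000)] + [10**9]
print(naive_bounds(training))     # (0, 1000000000)
print(quantile_bounds(training))  # (0, 90)
```

The trade-off is that the rarest legitimate values, beyond the chosen quantiles, would also be flagged, so the quantile levels become a tuning choice.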

Conclusions

In this article, we explored, with a concrete example, the main techniques available for detecting cyber attacks and highlighted the advantages of anomaly-based detection when faced with an adversary. In reality, the two techniques are useful and complementary: they should always be combined appropriately, so that AI systems can both provide information on the type of attack (misuse-based) and detect new attacks (anomaly-based). The key objective is to build detection systems that focus on, and rely only on, invariant attack characteristics: those that must be present for an attack to succeed.
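One possible way to combine the two is sketched below, under simplifying assumptions: a hard-coded integer profile (0 to 40) plays the anomaly detector, and substring signatures stand in for per-category misuse classifiers. All names, bounds, and signatures are hypothetical:

```python
import re

INTEGER = re.compile(r"-?[0-9]+")

# Hypothetical signatures standing in for per-category misuse classifiers.
SIGNATURES = {
    "SQL injection": ["union select", "' or 1=1", "and 1"],
    "cross-site scripting": ["<script"],
}


def analyze(value: str, minimum: int = 0, maximum: int = 40) -> str:
    """Anomaly-based gate first, misuse-based labeling second."""
    # Anomaly-based step: anything outside the learned integer profile is suspicious.
    if INTEGER.fullmatch(value) and minimum <= int(value) <= maximum:
        return "normal"
    # Misuse-based step: try to attach a known attack type to the anomaly.
    lowered = value.lower()
    for category, patterns in SIGNATURES.items():
        if any(pattern in lowered for pattern in patterns):
            return f"anomaly: {category}"
    return "anomaly: unknown type"


for value in ["10", "AND 1", "<script>alert(1)</script>", "-10"]:
    print(repr(value), "->", analyze(value))
```

Every anomaly is reported, and the misuse-based step only enriches the alert with a known attack type when one matches, so a new attack such as `-10` is still caught.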
